Missing-Fact Detection and Source Classification for Daily News

Introduction

With the development of the Internet, people can get news online quickly and conveniently. However, this convenience carries a risk. A reader may prefer one news medium over others, and that medium may report news from its own position while omitting facts it does not want readers to know. To address this, we propose a system that detects the facts missing from a news report.

Method

Work flow

First, we group news reports by their embeddings from the Universal Sentence Encoder (USE)[1]. Reports within a group are highly related; as expected, most of them cover the same event. Meanwhile, we extract a summary of each report using PageRank. We then summarize each group from the summaries of its reports. Finally, we compare each report's summary with its group summary to find the report's missing facts.

Grouping news

This part was implemented by Yu-An Wang and is omitted here.
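The grouping algorithm itself is not described in this write-up. Purely as an illustration of what grouping by USE embeddings could look like, the sketch below assumes the embedding vectors are already computed and uses a greedy single-pass rule with a hypothetical cosine-similarity `threshold`. It is not the implementation referred to above.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity of two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def group_news(embeddings: List[Sequence[float]], threshold: float = 0.7) -> List[List[int]]:
    """Hypothetical greedy grouping, not the original method.

    Each report joins the first group whose first member (the group's seed)
    is at least `threshold` cosine-similar to it; otherwise it starts a new
    group. Groups are returned as lists of report indices.
    """
    groups: List[List[int]] = []
    for i, emb in enumerate(embeddings):
        for group in groups:
            if cosine(embeddings[group[0]], emb) >= threshold:
                group.append(i)
                break
        else:  # no existing group was similar enough
            groups.append([i])
    return groups
```

A single pass keeps the sketch short; its result depends on input order, which a proper clustering method would avoid.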

PageRank

For each news report, we split the raw content into sentences and compute the similarity of every sentence pair using USE and edit distance. With the similarity matrix in hand, we rank the sentences by PageRank and take the top k sentences as the report's summary, where k is a hyperparameter.

We summarize each news group in the same way, applying PageRank to the combined summaries of the reports in the group.
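The ranking step can be sketched as a TextRank-style weighted PageRank over the sentence-similarity matrix. This is a minimal sketch under stated assumptions: `edit_similarity` is a pure-Python stand-in for the editdistance package, USE is left out because it needs a pretrained model, and since the write-up does not say how the two scores are combined, any `similarity` function can be passed in. The damping factor 0.85 and the 50 iterations are conventional defaults, not values from the write-up.

```python
from typing import Callable, List


def edit_similarity(a: str, b: str) -> float:
    """1 - normalized Levenshtein distance (stand-in for the editdistance package)."""
    if not a and not b:
        return 1.0
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            # deletion, insertion, substitution (free if characters match)
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = cur
    return 1.0 - prev[-1] / max(len(a), len(b))


def pagerank(sim: List[List[float]], damping: float = 0.85, iters: int = 50) -> List[float]:
    """Weighted PageRank by power iteration over a similarity matrix."""
    n = len(sim)
    # Ignore self-similarity; each sentence passes its score to the others
    # in proportion to edge weight. Rows with no outgoing weight are skipped.
    weights = [[sim[i][j] if i != j else 0.0 for j in range(n)] for i in range(n)]
    out_sum = [sum(row) for row in weights]
    scores = [1.0 / n] * n
    for _ in range(iters):
        scores = [
            (1 - damping) / n
            + damping * sum(weights[j][i] / out_sum[j] * scores[j] for j in range(n) if out_sum[j] > 0)
            for i in range(n)
        ]
    return scores


def summarize(sentences: List[str], similarity: Callable[[str, str], float], k: int) -> List[str]:
    """Return the top-k sentences by PageRank score, kept in their original order."""
    sim = [[similarity(a, b) for b in sentences] for a in sentences]
    scores = pagerank(sim)
    top = sorted(range(len(sentences)), key=lambda i: scores[i], reverse=True)[:k]
    return [sentences[i] for i in sorted(top)]
```

The same `summarize` call covers the group summary: concatenate the summary sentences of every report in the group and rank them again, e.g. `summarize([s for summ in report_summaries for s in summ], edit_similarity, k)`, where `report_summaries` is a hypothetical list of per-report summaries. Returning the selected sentences in their original order is a readability choice; the write-up only specifies taking the top k.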

Missing facts

We call each sentence in a news summary “a fact”. Comparing a report's summary with its group summary, the sentences that appear in the group summary but not in the report summary are the report's missing facts. Conversely, the sentences that appear in the report summary but not in the group summary are the report's exclusive content.
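Under this definition, finding missing facts reduces to a set difference over sentences. A minimal sketch, assuming facts are compared by exact string match (which holds when the group summary is selected from the reports' own summary sentences):

```python
from typing import List, Tuple


def compare_summaries(report_summary: List[str], group_summary: List[str]) -> Tuple[List[str], List[str]]:
    """Return (missing_facts, exclusive_content) for one report.

    Assumes exact string matching between sentences; order follows the
    summary each sentence comes from.
    """
    report_set = set(report_summary)
    group_set = set(group_summary)
    missing = [s for s in group_summary if s not in report_set]
    exclusive = [s for s in report_summary if s not in group_set]
    return missing, exclusive
```

Paraphrased sentences would slip through exact matching; a similarity threshold (for example on USE embeddings) would be one way to relax it.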

Application

島民衛星 https://islander.cc/

Source Classifier for Daily News and Farm Posts

Introduction

News media are not always as neutral as one might expect. Because of these biases, and because each news medium tends to have its own writing style, a classifier can learn to identify the source of a report. We use BERT[2] as the contextual representation extractor and train a classifier to predict the source (news medium) of a news report from its content (or title). Besides daily news, this kind of classifier can also be applied to identify the source of farm posts, which are usually biased and contain fake information.

Data

- Daily news
  - Number of classes: 4
  - Taiwan's four major newspapers: China Times (中國時報), United Daily News (聯合報), Liberty Times (自由時報), and Apple Daily (蘋果日報)
  - Preprocessing: the raw content sometimes contains the reporter's name, the name of the news medium itself, or slogans. We filter these out using hand-crafted rules.
- Farm posts
  - Number of classes: 16
  - Sources: mission-tw, qiqu.live, qiqu.world, hssszn, qiqi.today, cnba.live, 77s.today, i77.today, nooho.net, hellotw, qiqu.pro, taiwan-politicalnews, readthis.one, twgreatdaily.live, taiwan.cn, itaiwannews.cn
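The hand-crafted filtering rules are not listed in the write-up. The sketch below only illustrates the idea with two hypothetical rules: a regular expression for bylines of the form "（記者王小明／台北報導）" (reporter name / city of report) and a rule that deletes the four newspaper names. Real rules, including ones for slogans, would have to be derived from the data.

```python
import re
from typing import List

# Hypothetical example rules; not the rules actually used.
MEDIA_NAMES = ["中國時報", "聯合報", "自由時報", "蘋果日報"]
PATTERNS = [
    # Bylines such as "（記者王小明／台北報導）", with full- or half-width punctuation.
    re.compile(r"[（(]?記者[^／/）)]{1,10}[／/][^）)]{1,10}報導[）)]?"),
    # The medium's own name, which would leak the label to the classifier.
    re.compile("|".join(map(re.escape, MEDIA_NAMES))),
]


def clean_content(text: str, patterns: List[re.Pattern] = PATTERNS) -> str:
    """Delete every match of each rule, then trim surrounding whitespace."""
    for pattern in patterns:
        text = pattern.sub("", text)
    return text.strip()
```

Removing the medium's name matters especially here: left in, it would let the classifier predict the label without learning anything about style or bias.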

Method

C-512

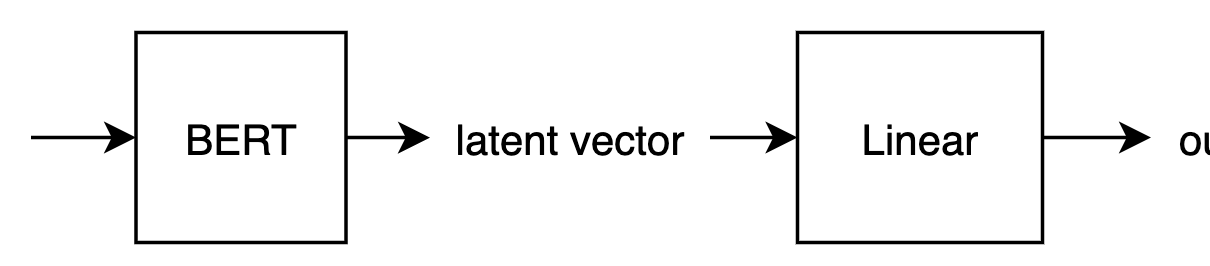

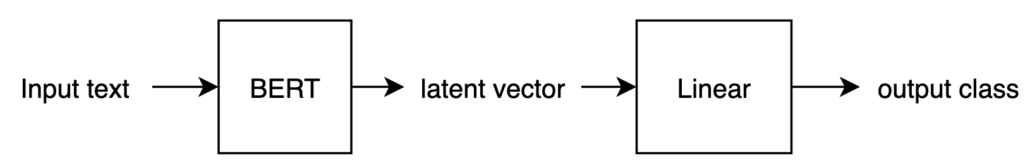

- As shown in the figure below, this classifier consists of a BERT model followed by a linear layer. It handles inputs shorter than 512 tokens. The latent vector fed into the linear layer is the hidden state of the CLS token.

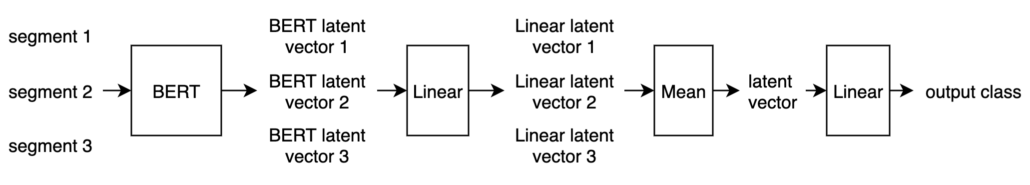
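A minimal PyTorch sketch of C-512, assuming the Hugging Face `transformers` library (the write-up does not name its framework). The class wraps any `BertModel` and applies one linear layer to the hidden state at position 0, which is where the tokenizer places `[CLS]`.

```python
import torch
from torch import nn
from transformers import BertConfig, BertModel


class C512(nn.Module):
    """Sketch of C-512: BERT encoder plus one linear layer on the [CLS] hidden state."""

    def __init__(self, bert: BertModel, num_classes: int):
        super().__init__()
        self.bert = bert
        self.classifier = nn.Linear(bert.config.hidden_size, num_classes)

    def forward(self, input_ids: torch.Tensor, attention_mask: torch.Tensor = None) -> torch.Tensor:
        hidden = self.bert(input_ids=input_ids, attention_mask=attention_mask).last_hidden_state
        cls_vec = hidden[:, 0]  # hidden state of the [CLS] token (position 0)
        return self.classifier(cls_vec)  # unnormalized class scores (logits)
```

In practice the encoder would be loaded from a pretrained Chinese checkpoint, for example `BertModel.from_pretrained("bert-base-chinese")`; the checkpoint actually used is not stated here. Training would apply a cross-entropy loss to the logits, with 4 classes for daily news or 16 for farm posts.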

C-whole

- Since the pretrained BERT model only accepts inputs shorter than 512 tokens, we propose a method to handle longer texts.

- First, we train a C-512 model. The C-whole model uses the BERT module of the trained C-512 model as its representation extractor. Given an input of arbitrary length $l$, we split it into $\lceil l/512 \rceil$ segments. The BERT module derives a representation for each segment, which is then passed through a linear layer. The outputs of this linear layer are averaged, and the averaged vector is fed into another linear layer to obtain the final output of the C-whole model.
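A sketch of C-whole in the same assumed PyTorch and `transformers` setting. It takes the BERT module from a trained C-512 model, splits a tokenized document into segments, applies a per-segment linear layer to each segment's `[CLS]` vector, averages, and classifies with a second linear layer. Two details are assumptions: the per-segment linear layer keeps the hidden size, and each segment holds 510 content tokens (`seg_len`) so that `[CLS]` and `[SEP]` still fit within BERT's 512 positions, which makes the segment count $\lceil l/510 \rceil$ rather than $\lceil l/512 \rceil$.

```python
import torch
from torch import nn
from transformers import BertConfig, BertModel


class CWhole(nn.Module):
    """Sketch of C-whole: segment-wise BERT features, averaged, then classified."""

    def __init__(self, bert: BertModel, num_classes: int, seg_len: int = 510):
        super().__init__()
        self.bert = bert  # assumed: the BERT module taken from a trained C-512 model
        hidden = bert.config.hidden_size
        self.seg_linear = nn.Linear(hidden, hidden)  # per-segment layer (width is an assumption)
        self.out_linear = nn.Linear(hidden, num_classes)  # final classification layer
        self.seg_len = seg_len  # content tokens per segment; 510 leaves room for [CLS]/[SEP]

    def forward(self, token_ids: torch.Tensor, cls_id: int, sep_id: int) -> torch.Tensor:
        # token_ids: 1-D LongTensor of content token ids (no special tokens), any length.
        outputs = []
        for seg in token_ids.split(self.seg_len):  # ceil(l / seg_len) segments
            ids = torch.cat([torch.tensor([cls_id]), seg, torch.tensor([sep_id])]).unsqueeze(0)
            cls_vec = self.bert(input_ids=ids).last_hidden_state[:, 0]  # (1, hidden)
            outputs.append(self.seg_linear(cls_vec))
        pooled = torch.stack(outputs).mean(dim=0)  # average over segments -> (1, hidden)
        return self.out_linear(pooled)  # (1, num_classes)
```

Segments are encoded one at a time so the shorter final segment needs no padding; batching them with an attention mask would be faster. Construction would look like `CWhole(c512.bert, num_classes=4)`, where `c512` is a hypothetical trained C-512 model that keeps its encoder in a `bert` attribute.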

Application

島民衛星 https://islander.cc/

1. Daniel Cer et al. “Universal Sentence Encoder.” arXiv preprint arXiv:1803.11175 (2018).

2. Jacob Devlin et al. “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding.” arXiv preprint arXiv:1810.04805 (2018).