AI Jazz Bass Player: Bass Accompaniment in a Jazz Piano Trio Setting

November 2018 marks the debut of Yating, an AI Pianist that learns to compose and perform keyboard-style music by means of the AI technology we are developing here at the Taiwan AI Labs. Instead of playing pre-existing music, Yating listens to you and composes original piano music on-the-fly in response to the affective cues found in your voice input. This is done with a combination of our technology in automatic speech recognition, affective computing, human-computer interaction, and automatic music composition. In November 2018 Yating gave a public concert as her debut at the Taiwan Social Innovation Lab (社會創新實驗中心) in Taipei (see the trailer here; in Mandarin). Now, you can download the App we developed (both iOS and Android versions are available) to listen to the piano music Yating creates for you any time through your smartphone.

Yating has kept growing her skill set since then. One of the most important skills we want Yating to have is the ability to create original multi-track music, i.e., a piece of music written for multiple instruments. Unlike the previous case of composing keyboard-only, single-instrument music, composing multi-track music demands consideration of the relationships among the tracks/instruments involved in the piece. Each track must sound “right” on its own, and collectively the tracks must interact closely with one another and unfold over time interdependently.

We begin with the so-called Jazz Piano Trio setting, which consists of a pianist playing the melody and chords, a double bass player playing the bass, and a drummer playing the drums. We find this setting interesting because it involves a reasonable number of tracks with different roles, and because it is a direct extension of the previous piano-only setting. Our goal here is therefore to learn to compose original music with these three tracks in the style of Jazz.

In this blog post, we share how Yating learns to play the part of the bass player. We may talk about the parts of the pianist and the drummer in the near future.

Specifically, we consider the case of bass accompaniment over given chords and rhythm. This can be understood as the case where the pianist plays only the chords (but not the melody), the drummer plays the rhythm, and the bass player has to compose the bassline over the provided harmonic and rhythmic progression. In this case, we only have to compose the music of one specific track, but while composing that track we need to take into account the interdependence among all three tracks.

To do this, we train a deep recurrent neural network on an in-house collection of 1,500+ eight-bar phrases segmented from Jazz piano trio MIDI files. For MIDI I/O, we use pretty_midi (link).

You can first listen to a few examples of the bass this model composes given human-composed chords and rhythm.

You can find below a video demonstrating a music piece our in-house musicians created in collaboration with this AI bass player.

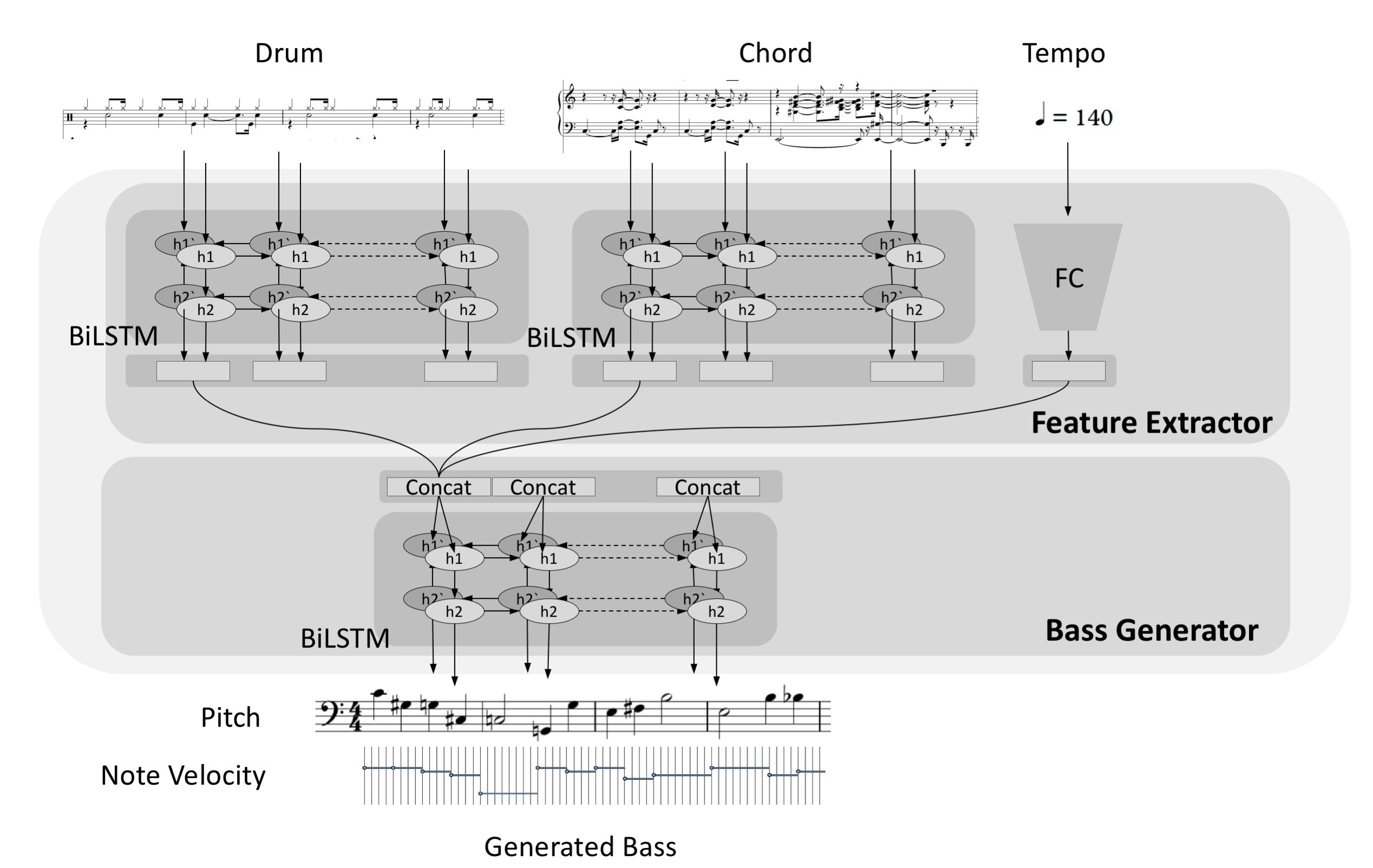

The architecture of our bass composition neural network is shown in the accompanying figure. It can be viewed as a many-to-many recurrent network. The input to the model comprises a chord progression and a drum pattern, both eight bars long, and the intended tempo of the music. The target output of the model is a bass solo that is also eight bars long, comprising the pitch and velocity (which is related to loudness) associated with each note.

The drum pattern and chord sequence are processed by separate stacks of two recurrent layers of bidirectional long short-term memory (BiLSTM) units.

The input drum pattern is represented by a sequence of eight 16-dimensional vectors, one vector for each bar. Each element of a vector represents the drum activity at one 16th beat of the bar, calculated mainly by counting the number of active drums at that 16th beat among the following nine drums: kick drum, snare drum, closed/open hi-hat, low/mid/high toms, crash cymbal, and ride cymbal. We weight the kick drum slightly more heavily to differentiate it from the other drums. The output of the last BiLSTM layer of the drum branch is another sequence of eight K1-dimensional vectors, again one vector for each bar. Here, K1 denotes the number of hidden units of the last BiLSTM layer of the drum branch.
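As a rough illustration of this drum representation, the sketch below builds the 8 x 16 activity matrix from a list of drum hits. The `(bar, step, drum_name)` hit format, the drum name strings, and the kick weight of 2.0 are all assumptions made for the example; the post does not state the actual weight or the exact counting rule.

```python
import numpy as np

# The nine drums named in the text (the string labels are hypothetical).
DRUMS = ["kick", "snare", "closed_hihat", "open_hihat",
         "low_tom", "mid_tom", "high_tom", "crash", "ride"]
KICK_WEIGHT = 2.0  # assumption: the post only says the kick is weighted "a bit more"


def drum_activity(hits, n_bars=8, steps_per_bar=16, kick_weight=KICK_WEIGHT):
    """Return an (n_bars, steps_per_bar) matrix of drum activity.

    hits: iterable of (bar, step, drum_name) tuples, all zero-indexed.
    Each distinct drum counts once per 16th-note step; the kick counts more.
    """
    activity = np.zeros((n_bars, steps_per_bar), dtype=np.float32)
    seen = set()
    for bar, step, drum in hits:
        if drum not in DRUMS or (bar, step, drum) in seen:
            continue  # ignore unknown drums and duplicate hits
        seen.add((bar, step, drum))
        activity[bar, step] += kick_weight if drum == "kick" else 1.0
    return activity


# One bar of a toy pattern: kick + ride on beat 1, hi-hat on beat 2, snare + ride on beat 3.
hits = [(0, 0, "kick"), (0, 0, "ride"), (0, 4, "closed_hihat"),
        (0, 8, "snare"), (0, 8, "ride")]
pattern = drum_activity(hits)
print(pattern.shape)  # (8, 16): eight bars, sixteen 16th-note steps each
```

Each row of `pattern` plays the role of one 16-dimensional bar vector fed to the drum branch.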

The input chord progression, on the other hand, is represented by a sequence of thirty-two 24-dimensional vectors, one vector for each beat. We use a higher temporal resolution here to reflect the fact that the chord may change every beat (while the rhythm may be more often perceived at the bar level). Each vector is composed of two parts, a 12-dimensional multi-hot “pitch class profile” (PCP) vector representing the activity of the twelve pitch classes (C, C#, …, B, in a chromatic scale) in that beat, and another 12-dimensional one-hot vector marking the pitch class of the bass note (not the root note) in that chord. The output of the last BiLSTM layer of the chord branch is a sequence of thirty-two K2-dimensional vectors, again one vector for each beat. Here, K2 denotes the number of hidden units of the last BiLSTM layer of the chord branch.
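To make the 24-dimensional chord vector concrete, here is a minimal sketch that encodes one beat of a chord as a multi-hot PCP concatenated with a one-hot bass pitch class. The function name and the use of MIDI note numbers as input are assumptions for illustration, not the authors' code.

```python
import numpy as np

PITCH_CLASSES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def chord_vector(chord_pitches, bass_pitch):
    """Encode one beat of harmony as a 24-dimensional vector.

    chord_pitches: MIDI note numbers sounding in the chord.
    bass_pitch: MIDI note number of the lowest (bass) note of the chord.
    """
    pcp = np.zeros(12, dtype=np.float32)
    for pitch in chord_pitches:
        pcp[pitch % 12] = 1.0          # multi-hot pitch class profile
    bass = np.zeros(12, dtype=np.float32)
    bass[bass_pitch % 12] = 1.0        # one-hot bass pitch class
    return np.concatenate([pcp, bass])


# C major in first inversion (C/E): the root is C, but the bass note is E.
vec = chord_vector([52, 55, 60], bass_pitch=40)  # E3, G3, C4 over E2

# A toy progression that holds the same chord for all 32 beats (8 bars x 4 beats).
progression = np.stack([vec] * 32)
print(progression.shape)  # (32, 24)
```

The C/E example shows why the second half marks the bass note rather than the root: the PCP half is identical to that of a root-position C major chord, and only the bass half distinguishes the inversion.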

The tempo of the 8-bar segment, which is available from the MIDI file, is represented as a 35-dimensional one-hot vector after quantizing the tempo (non-uniformly) into 35 bins (the number of bins was chosen quite arbitrarily). The vector is used as the input to a fully-connected layer to get a K3-dimensional vector representing the tempo information for the whole segment.
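The tempo branch can be sketched as follows. The bin edges below are purely an assumption (the post does not give them); they are chosen only so that 34 edges yield 35 non-uniform bins. `K3 = 8` and the random weights are likewise hypothetical, and any activation function is omitted.

```python
import numpy as np

# Assumed bin edges in BPM: coarse for slow and fast tempi, fine in between.
TEMPO_EDGES = np.concatenate([
    np.arange(40, 80, 10),    # 4 edges: 40, 50, 60, 70
    np.arange(80, 200, 5),    # 24 edges: 80, 85, ..., 195
    np.arange(200, 320, 20),  # 6 edges: 200, 220, ..., 300
])
N_BINS = len(TEMPO_EDGES) + 1  # 34 edges -> 35 bins


def tempo_one_hot(bpm):
    """Quantize a tempo in BPM into one of N_BINS bins and one-hot encode it."""
    one_hot = np.zeros(N_BINS, dtype=np.float32)
    one_hot[np.digitize(bpm, TEMPO_EDGES)] = 1.0
    return one_hot


# A fully-connected layer on a one-hot input amounts to selecting one row of W.
K3 = 8  # hypothetical size of the tempo representation
rng = np.random.default_rng(0)
W = rng.normal(size=(N_BINS, K3)).astype(np.float32)
b = np.zeros(K3, dtype=np.float32)

x = tempo_one_hot(120.0)
tempo_embedding = x @ W + b
print(tempo_embedding.shape)  # (8,)
```

Seen this way, the fully-connected layer behaves like a learned lookup table with one K3-dimensional entry per tempo bin.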

Compared to the drums and chords, we use an even finer temporal resolution for the bass generator (the second half of the bass composition model shown in the figure above): we aim to generate the bass for every 16th beat. The input of the bass generator is therefore a sequence of 128 (K1+K2+K3)-dimensional vectors, one vector for each 16th beat. Each vector is obtained by concatenating the outputs of the drum branch, chord branch, and tempo branch that cover the corresponding 16th beat, i.e., the drum vector of the enclosing bar, the chord vector of the enclosing beat, and the tempo vector shared by the whole segment. The bass generator is again implemented with two stacked BiLSTM layers. From the output of the last BiLSTM layer, we aim to generate a 39-dimensional one-hot vector representing the pitch and a 14-dimensional one-hot vector representing the velocity used by the bass for that 16th beat. Here, the pitch vector is 39-dimensional because we consider 37 pitches (MIDI numbers 28 to 64, which corresponds to the pitch range of the double bass) plus one rest token and one “repeat-the-note” token. The velocity vector is 14-dimensional because we quantize (non-uniformly) the velocity value (which originally ranges from 0 to 127) into 14 bins. Because the model has to predict both the pitch and the velocity of the bass, it can be said that the model is doing multi-task learning.
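The shape bookkeeping in this paragraph can be checked with a small NumPy sketch. It assumes the coarser branch outputs are simply repeated to reach the 16th-beat resolution, which is one plausible reading of the text rather than a confirmed detail. The values of K1, K2, and K3, the random stand-in activations, and the ordering of the pitch tokens are also assumptions.

```python
import numpy as np

K1, K2, K3 = 4, 6, 2  # hypothetical hidden sizes
rng = np.random.default_rng(0)

# Random stand-ins for the three branch outputs.
drum_out = rng.normal(size=(8, K1))    # one vector per bar
chord_out = rng.normal(size=(32, K2))  # one vector per beat
tempo_out = rng.normal(size=(K3,))     # one vector per segment

# Upsample everything to 128 steps (8 bars x 16 sixteenth beats).
drum_steps = np.repeat(drum_out, 16, axis=0)   # each bar covers 16 steps
chord_steps = np.repeat(chord_out, 4, axis=0)  # each beat covers 4 steps
tempo_steps = np.tile(tempo_out, (128, 1))     # same tempo at every step

generator_input = np.concatenate([drum_steps, chord_steps, tempo_steps], axis=1)
print(generator_input.shape)  # (128, 12), i.e. (128, K1 + K2 + K3)

# Output pitch vocabulary: 37 pitches + rest + repeat-the-note = 39 tokens.
LOWEST_PITCH, HIGHEST_PITCH = 28, 64
N_PITCHES = HIGHEST_PITCH - LOWEST_PITCH + 1  # 37
REST_TOKEN = N_PITCHES                        # assumed index for the rest token
REPEAT_TOKEN = N_PITCHES + 1                  # assumed index for the repeat token
N_PITCH_TOKENS = N_PITCHES + 2                # 39


def pitch_to_token(midi_pitch):
    """Map a MIDI pitch within the double bass range to its token index."""
    if not LOWEST_PITCH <= midi_pitch <= HIGHEST_PITCH:
        raise ValueError("pitch outside the modeled double bass range")
    return midi_pitch - LOWEST_PITCH
```

For example, step 17 lies in the second bar and the fifth beat, so row 17 of `generator_input` holds `drum_out[1]`, `chord_out[4]`, and the shared tempo vector.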

After training the model for tens of epochs, we find that it starts to generate reasonable bass, but the pitch contour is sometimes too fragmented. It might be possible to further improve the result by collecting more training data, but we decided instead to apply a few simple postprocessing rules based on music knowledge. We are in general happy with the current result: the bass fits nicely with the drum pattern and has a pleasant groove.

You can listen to more music we generated below.

This is just the beginning of Yating’s journey in learning to compose multi-track music. The bass accompaniment model itself can be further improved, but for now we’d like to move on and have fun learning to compose the melody, chords, and drums in the Jazz piano trio setting.

By: Yin-Cheng Yeh, Chung-Yang Wang, Yi-Pai Liang, and Yi-Hsuan Yang (Taiwan AI Labs Yating/Music Team)