Virtual Aerial Tour

Written By: Yi-Chun Kuo, Hao-Kai Wen

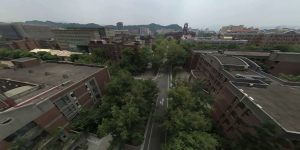

Chi Po-Lin’s Beyond Beauty: Taiwan from Above brought a new perspective on our familiar island. In that spirit, we are building a smart city service that lets people explore the surroundings of their daily life from a unique angle. To develop a prototype, we flew drones carrying a 360 camera over the campus of National Taiwan University and over Anping District, Tainan City, recorded street view videos, and built an aerial tour application. A 360 camera captures every viewing angle, which gives us flexibility when processing the videos: we only need to fly over a road once to present a variety of flying experiences. Eco-House, NTU Library, and Drunken Moon Lake may be familiar to you, but have you ever seen them from above? We invite you to visit the NTU campus from a drone’s-eye view:

https://smartcity.ailabs.tw/aerial-tour/ntu/

Text-to-speech attraction introduction

We integrate several of AI Labs’ technologies into our service. With text-to-speech, a virtual tour guide vividly introduces the attractions to users. Adjusting the color, tone, brightness, and contrast of the videos makes the scenes more attractive, and drawing-style videos give visitors a distinctive view of the city. Below, we introduce the methodology behind the scenes.

Style-transferred Anping scene

Shaky video is an issue for our platform. The instability of the drone footage is caused by the vibration of the propellers. We applied Kopf’s method [1] to estimate the relative camera poses between frames. Once we have these camera poses, we can offset the shaking and stabilize the videos.

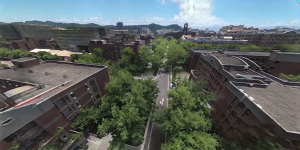
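To make the idea of offsetting the shake concrete, here is a minimal sketch. It is not Kopf's full method [1], which works with 3D camera rotations; it assumes only a per-frame yaw angle in degrees (already estimated and unwrapped) and an equirectangular frame stored as a list of pixel rows. The function names, the moving-average smoothing, and the sign convention of the rotation are all illustrative assumptions.

```python
def smooth(values, radius=2):
    """Moving average; the window is clamped at both ends of the sequence."""
    out = []
    for i in range(len(values)):
        lo, hi = max(0, i - radius), min(len(values), i + radius + 1)
        window = values[lo:hi]
        out.append(sum(window) / len(window))
    return out


def yaw_corrections(yaw_deg, radius=2):
    """Per-frame rotation that moves each frame onto the smoothed camera path.

    Assumes yaw_deg holds unwrapped angles (no jumps at +/-180 degrees).
    """
    smoothed = smooth(yaw_deg, radius)
    return [s - y for s, y in zip(smoothed, yaw_deg)]


def rotate_equirect_yaw(frame, yaw_deg):
    """Rotate an equirectangular frame about the vertical axis.

    For this projection a yaw rotation is a circular shift of pixel columns.
    frame is a list of rows; each row is a list of pixels.
    """
    width = len(frame[0])
    shift = round(yaw_deg / 360.0 * width) % width
    return [row[-shift:] + row[:-shift] for row in frame]
```

In use, each frame would be passed to `rotate_equirect_yaw` together with its entry from `yaw_corrections`, so that the remaining camera motion follows the smoothed path. Pitch and roll need a full spherical resampling and are left out of this sketch.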

Color inconsistency between videos is another issue. Because the videos were taken on different days, the lighting and weather conditions differ considerably, and abrupt color changes in the scene make users uncomfortable. We tried many approaches to overcome this problem. Style transfer approaches fail because their results are not photorealistic. The method our audience found most convincing is [2], which uses deep features to match semantically similar regions between images and transfers color between the corresponding regions. That is, the color of a tree is transferred to a tree, and the color of a car is transferred to a car. In this way, videos taken on different days keep a consistent color.

Left: original image; right: color-transferred image
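The region-to-region idea described above can be illustrated with a much simpler stand-in. The sketch below does not implement deep image analogy [2]; it assumes a semantic label is already available for every pixel and swaps in a per-region mean and standard deviation match for each color channel. The function name, the flat pixel-list layout, and the 0 to 255 value range are assumptions made for illustration.

```python
from statistics import mean, pstdev


def region_stats(pixels, labels):
    """Per-label, per-channel (mean, std) of a flat list of (r, g, b) pixels."""
    groups = {}
    for pixel, label in zip(pixels, labels):
        groups.setdefault(label, []).append(pixel)
    return {
        label: [(mean(ch), pstdev(ch)) for ch in zip(*group)]
        for label, group in groups.items()
    }


def transfer_region_color(src_pixels, src_labels, ref_pixels, ref_labels):
    """Match the color statistics of each source region to the reference
    region that carries the same label (tree to tree, car to car)."""
    src_stats = region_stats(src_pixels, src_labels)
    ref_stats = region_stats(ref_pixels, ref_labels)
    result = []
    for pixel, label in zip(src_pixels, src_labels):
        if label not in ref_stats:
            # No counterpart in the reference image: leave the pixel as is.
            result.append(tuple(pixel))
            continue
        new_pixel = []
        for value, (s_mean, s_std), (r_mean, r_std) in zip(
            pixel, src_stats[label], ref_stats[label]
        ):
            scale = r_std / s_std if s_std > 0 else 1.0
            shifted = (value - s_mean) * scale + r_mean
            new_pixel.append(min(255.0, max(0.0, shifted)))  # clamp to 0..255
        result.append(tuple(new_pixel))
    return result
```

Matching statistics per label rather than over the whole image is what keeps the color of one kind of object from leaking into another.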

In addition to color transfer, a sky replacement algorithm such as [3] helps reduce the color inconsistency between videos. If the sky is the same across videos, viewers will assume they were shot on the same day. We developed a sky replacement algorithm that replaces a cloudy sky with a clear one. First, we use semantic segmentation models to detect a coarse skyline and a matting algorithm to refine the details. Then, we build the sequence of 360 camera positions in the same way as in 360 video stabilization. We rotate the sky image according to these camera positions to simulate a new sunny sky. Finally, we composite the new sky with the original videos to generate appealing street view videos.

Top: original image; bottom: sky-replaced image
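The final compositing step can be sketched as a standard alpha blend. The sketch assumes the matte is already computed (1.0 for pure sky, 0.0 for pure foreground, fractional values along the refined skyline) and that the new sky image has already been rotated to match the camera pose of the frame. The function name and the nested-list image layout are illustrative assumptions, not the production code.

```python
def composite_sky(frame, new_sky, alpha):
    """Blend a replacement sky into a frame using a soft sky matte.

    frame, new_sky: H x W nested lists of (r, g, b) tuples.
    alpha: H x W nested list of floats in [0, 1], where 1.0 means sky.
    """
    out = []
    for frame_row, sky_row, alpha_row in zip(frame, new_sky, alpha):
        out_row = []
        for frame_px, sky_px, a in zip(frame_row, sky_row, alpha_row):
            # out = a * sky + (1 - a) * original, per channel
            out_row.append(
                tuple(a * s + (1.0 - a) * f for f, s in zip(frame_px, sky_px))
            )
        out.append(out_row)
    return out
```

The fractional matte values matter most along tree branches and rooftops, where a hard 0 or 1 mask would leave a visible halo of the old sky.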

This is where we currently stand in building an aerial tour service. We will keep exploring interesting and innovative methods to bring a unique perspective on our beautiful home.

References

[1] Kopf, Johannes. “360 video stabilization.” ACM Transactions on Graphics (TOG) 35.6 (2016): 195.

[2] Liao, Jing, et al. “Visual attribute transfer through deep image analogy.” arXiv preprint arXiv:1705.01088 (2017).

[3] Tsai, Yi-Hsuan, et al. “Sky is not the limit: semantic-aware sky replacement.” ACM Transactions on Graphics (TOG) 35.4 (2016): 149.