360° Depth Estimation

360° videos provide an immersive environment for people to engage with, and Taiwan Traveler is a smart online tourism platform that uses 360° panoramic views to deliver virtual sightseeing experiences. To immerse users in the virtual world, we aim to exploit depth information to provide a sense of space, enabling tourists to better explore scenic attractions.

Introduction

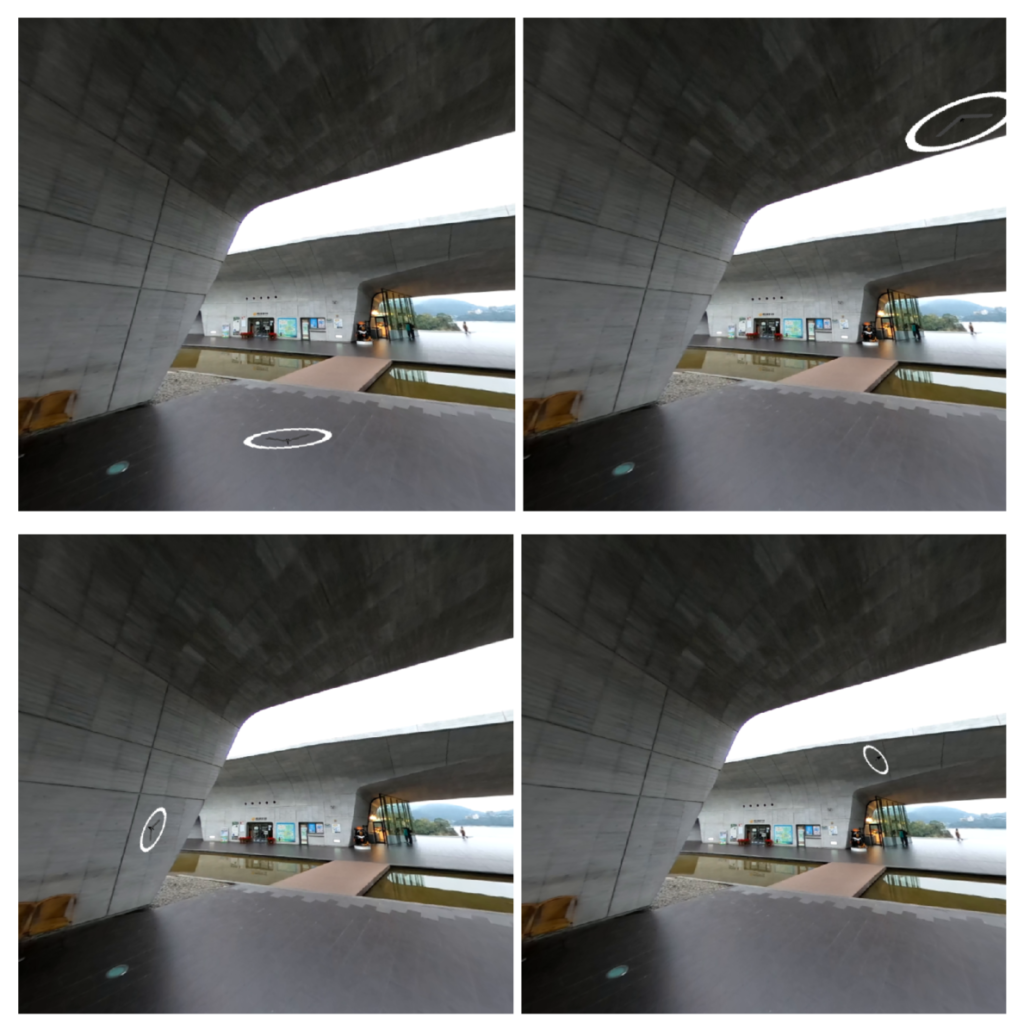

Depth plays a pivotal role in helping users perceive 3D space from 2D images. With depth information, we can give users more cues about 3D space through 2D perspective images. For example, a disparity map can be derived from the depth map and used to create stereo vision. Surface normals can also be inferred from the depth map for better shape understanding. By warping the cursor according to depth and surface normals, users can better experience the scene geometry as they hover over the images.
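To make the two derived quantities above concrete, here is a minimal NumPy sketch. It assumes a pinhole perspective view with the principal point at the image center; the function names and the `focal_px` and `baseline` parameters are hypothetical and introduced only for illustration. The normals are computed from finite differences of back-projected 3D points, which is one simple option among several.

```python
import numpy as np

def disparity_from_depth(depth, focal_px, baseline):
    """Disparity in pixels for a rectified stereo pair: d = f * B / Z."""
    return focal_px * baseline / np.maximum(depth, 1e-6)

def normals_from_depth(depth, focal_px):
    """Per-pixel unit normals from a perspective depth map (assumed pinhole model)."""
    h, w = depth.shape
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    # Back-project every pixel to a 3D point in camera coordinates.
    x = (u - cx) * depth / focal_px
    y = (v - cy) * depth / focal_px
    points = np.stack([x, y, depth], axis=-1)
    # Tangent vectors along image columns (u) and rows (v).
    du = np.gradient(points, axis=1)
    dv = np.gradient(points, axis=0)
    # The cross product order is chosen so that normals face the camera (-z).
    n = np.cross(dv, du)
    n /= np.maximum(np.linalg.norm(n, axis=-1, keepdims=True), 1e-12)
    return n
```

For a wall facing the camera at constant depth, this returns normals pointing straight back at the viewer, which is the orientation a warped cursor icon would follow.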

Depth estimation of perspective images is a well-studied task in computer vision, and deep learning has significantly improved its accuracy. Trained on large datasets containing ground-truth depth information, the estimation models learn to predict the 3D scene geometry from 2D images alone. However, depth estimation for 360° images remains difficult. Estimation models are often limited to indoor environments because datasets for outdoor scenes are lacking. In addition, the distortion in an equirectangular image makes the problem difficult to tackle with convolutional neural networks. Thus, predicting accurate 360° depth maps from images is still a challenging task.

In this project, given a single 360° image, we aim to estimate a 360° depth map, from which we can calculate the surface normals, allowing users to explore the scene by hovering the mouse cursor over the image. To obtain depth maps that deliver a satisfactory user experience, we use single-image depth estimation models designed for perspective images. To adapt these models to 360° images, our method blends depth information from different sampled perspective views to output a spatially consistent 360° depth map.

Existing Methods

Monocular depth estimation is the task of predicting scene depth from a single image. Recently, deep learning has been applied to this challenging task and has shown compelling results. Given a large amount of training data, a neural network can learn to infer per-pixel depth values and thus construct a complete depth map.

Currently, 360° depth estimation is more mature for indoor scenes than for outdoor ones, for two reasons. First, it is more challenging to collect ground-truth depth for 360° images, and most existing models rely on synthetic data of indoor scenes. Second, current models often leverage the structure and prior knowledge of indoor scenes. Our online intelligent tour system contains a large number of outdoor scenic attractions along with indoor scenes. Thus, existing models for indoor settings are not suitable for our application.

Our Method

To take advantage of the more mature monocular depth estimation for perspective images, our method first converts equirectangular images to perspective images. A common way is to project a spherical 360° image onto a six-face cubemap. Each face of the cubemap represents a part of the 360° image through projection. After converting a 360° image to several perspective images, a depth estimation model for perspective images, such as the one proposed by Ranftl et al. [1], can be applied. These models extract features from the NFOV (normal-field-of-view) images and predict depth maps.
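The conversion from an equirectangular image to one perspective (NFOV) view can be sketched as follows. This is an illustrative NumPy version with nearest-neighbor sampling, not the project's actual implementation; the function name, the axis conventions (camera looks along +z, x to the right, y down), and the yaw/pitch parameterization are assumptions made for this example. Calling it with yaw values of 0°, 90°, 180°, 270° and pitch values of ±90° would yield the six faces of a cubemap.

```python
import numpy as np

def equirect_to_perspective(equi, fov_deg, yaw_deg, pitch_deg, size):
    """Sample a square pinhole view from an equirectangular image (nearest neighbor)."""
    h, w = equi.shape[:2]
    f = 0.5 * size / np.tan(np.radians(fov_deg) / 2.0)  # focal length in pixels
    # Pixel-center grid on the image plane.
    c = (np.arange(size) + 0.5) - size / 2.0
    x, y = np.meshgrid(c, c)
    dirs = np.stack([x, y, np.full_like(x, f)], axis=-1)
    dirs /= np.linalg.norm(dirs, axis=-1, keepdims=True)
    # Rotate the rays: pitch about the x axis first, then yaw about the y axis.
    p, t = np.radians(pitch_deg), np.radians(yaw_deg)
    rx = np.array([[1, 0, 0],
                   [0, np.cos(p), -np.sin(p)],
                   [0, np.sin(p), np.cos(p)]])
    ry = np.array([[np.cos(t), 0, np.sin(t)],
                   [0, 1, 0],
                   [-np.sin(t), 0, np.cos(t)]])
    dirs = dirs @ (ry @ rx).T
    # Ray direction -> longitude/latitude -> equirectangular pixel.
    lon = np.arctan2(dirs[..., 0], dirs[..., 2])       # in [-pi, pi]
    lat = np.arcsin(np.clip(dirs[..., 1], -1.0, 1.0))  # positive means downward
    col = ((lon / (2 * np.pi) + 0.5) * w).astype(int) % w
    row = np.clip(((lat / np.pi + 0.5) * h).astype(int), 0, h - 1)
    return equi[row, col]
```

Passing a `fov_deg` larger than 90 makes neighboring faces overlap, which is useful when depth maps from adjacent views need to be matched afterwards. A production version would likely use bilinear interpolation instead of nearest-neighbor lookup.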

After obtaining the depth maps for NFOV images, the next step is to fuse those depth maps into a 360° depth map. For the fusion task, we have to deal with the following issues.

1. Objects across different NFOV images

When projecting the 360° spherical image onto different tangent planes, some objects may be split into parts. The estimation model may not recognize the split parts well and may predict inaccurate depth for them. Moreover, when the same object spans different planes, its parts can receive inconsistent depth values, producing apparent seams when the parts are assembled.

2. Depth scale

In a perspective depth map, though the relative depth relationship between objects is roughly correct, the depth gradient may be drastically large, e.g., a decoration on a wall or a surfboard on the water. The distinct color and texture between nearby objects would cause dramatically different depth values even if they are almost on the same plane.

The depth scale can also differ between perspective depth maps, making it difficult to fuse them into a 360° depth map. Although we can adjust the depth scales globally so that neighboring maps match in their overlapping areas, it is challenging to adjust the depth values of individual objects locally. In addition, each depth map is adjacent to several others, so we need to solve for globally optimal scaling factors.

3. Wrong estimation of vertical faces

Most of the training data for depth estimation models are captured from normal viewing angles. The datasets lack images looking toward the top (e.g., sky and ceiling) or bottom (e.g., ground and floor) of the scene. Thus, the trained models often fail to predict accurate depth maps for those views. Usually, the surrounding area of a horizontal perspective image is closer to the camera, while the center area is often deeper than other regions. In contrast, the center area of top/bottom views is often closer to the camera, and other regions are farther away. Thus, depth estimation of the top/bottom views is often less accurate because the wrong prior is used.
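For the depth-scale issue (item 2 above), one way to obtain globally consistent scaling factors is a small least-squares problem in the log domain. The sketch below is an assumption about how this could be done, not the exact procedure used in this project: it models each depth map as differing from the others only by a single multiplicative factor, takes the median log-ratio in each overlap as a robust measurement, and fixes the first map's scale to 1. The function name and the `overlaps` data layout are hypothetical.

```python
import numpy as np

def solve_global_scales(num_maps, overlaps):
    """Solve for one scale per depth map so overlapping regions agree.

    overlaps: list of (i, j, depth_i, depth_j), where depth_i and depth_j are
    1-D arrays holding the depths of maps i and j at the same overlapping pixels.
    Returns s with s[0] = 1 such that s[i] * depth_i ~= s[j] * depth_j.
    """
    rows, rhs = [], []
    for i, j, di, dj in overlaps:
        r = np.zeros(num_maps)
        r[i], r[j] = 1.0, -1.0
        rows.append(r)
        # Robust measurement of log(s_i) - log(s_j) from this overlap.
        rhs.append(np.median(np.log(dj) - np.log(di)))
    # Gauge constraint: fix the log-scale of map 0 to zero.
    gauge = np.zeros(num_maps)
    gauge[0] = 1.0
    rows.append(gauge)
    rhs.append(0.0)
    log_s, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return np.exp(log_s)
```

Because every pairwise constraint enters the same linear system, a map that borders several neighbors is balanced against all of them at once rather than being matched to one neighbor at a time. Models that predict depth only up to an unknown shift as well as a scale would need an extra offset term per map.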

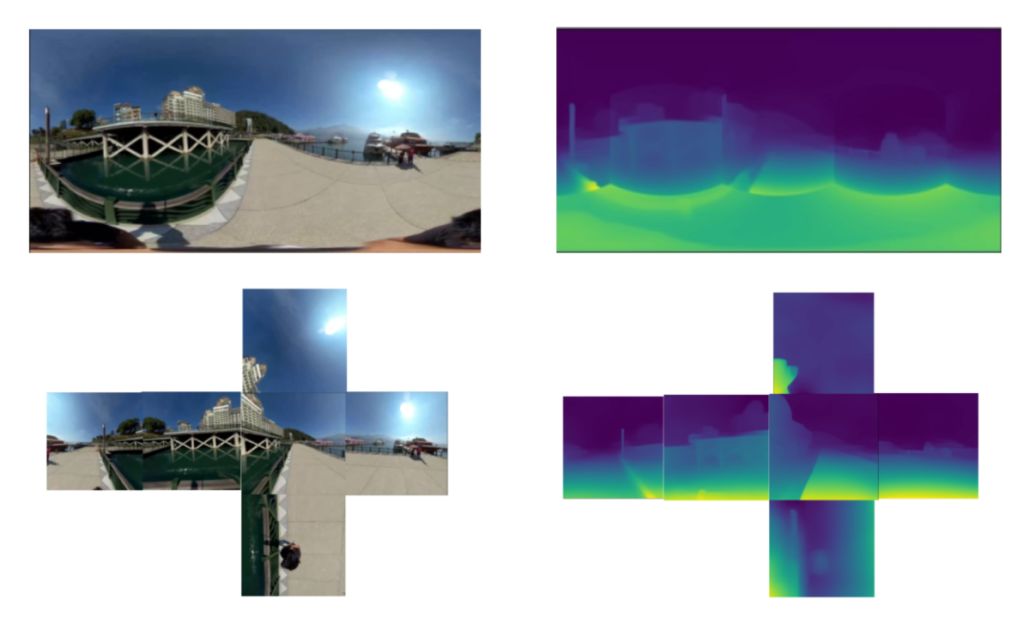

Figure 1: top-left: 360° image, bottom-left: cubemap images, bottom-right: cubemap depth maps, top-right: fused 360° depth map with seams.

Due to the aforementioned issues, a 360° depth map often suffers from apparent seams along the boundaries of depth maps after fusion. Our method first converts 360° images to cubemaps with a FOV (field-of-view) larger than 90 degrees, which guarantees overlap between adjacent faces. After that, the estimation model predicts the depth map for each image. We then project each perspective depth map onto a spherical surface and apply equirectangular projection so that the maps can be manipulated on a two-dimensional plane. To avoid abrupt changes between depth maps, we adjust their values according to the overlapping areas. Then we apply Poisson Blending [2] to compose them in the gradient domain. The depth gradients guide the values around the boundaries and propagate inward, retaining the relative depth and eliminating seams. As a result, the depth values change smoothly across depth maps.
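The gradient-domain composition can be illustrated with a small planar Poisson solver. This is a simplified sketch in the spirit of Poisson image editing [2], written with plain Jacobi iterations in NumPy; it ignores the horizontal wrap-around of an equirectangular map and assumes the blended region does not touch the image border. The function name and parameters are hypothetical.

```python
import numpy as np

def poisson_blend(target, source, mask, iters=2000):
    """Blend `source` into `target` where `mask` is True.

    Inside the mask the result follows the gradients of `source`; on the mask
    boundary it takes the values of `target`. The mask must not touch the
    image border. Solved with Jacobi iterations on the discrete Poisson equation.
    """
    f = target.astype(float).copy()
    src = source.astype(float)
    # Discrete Laplacian of the source: the divergence of the guidance field.
    lap = (4 * src[1:-1, 1:-1] - src[:-2, 1:-1] - src[2:, 1:-1]
           - src[1:-1, :-2] - src[1:-1, 2:])
    inner = mask[1:-1, 1:-1].astype(bool)
    for _ in range(iters):
        # Sum of the four neighbors, taken from the previous iterate.
        nb = f[:-2, 1:-1] + f[2:, 1:-1] + f[1:-1, :-2] + f[1:-1, 2:]
        f[1:-1, 1:-1][inner] = ((nb + lap) / 4.0)[inner]
    return f
```

If the source depth map differs from the target only by a constant offset, the offset disappears after blending because only gradients are copied, which is why this step removes seams while keeping relative depth. For full-resolution maps, a sparse direct or multigrid solver would be far faster than Jacobi iterations.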

Additionally, we utilize different strategies to divide a 360° image into several perspective images. Apart from the standard cubemap projection, our method utilizes two approaches to divide images and combine perspective depth maps.

The first approach is to use a polyhedron to approximate a spherical image. When projecting a 360° image onto a tangent plane, the peripheral regions are distorted, which affects the depth estimation, especially when the FOV is large. By projecting the spherical surface onto multiple faces (more than 20 faces), we better approximate a sphere with a polyhedron and obtain less distorted features. Nevertheless, adopting appropriate criteria to select and blend those depth maps is crucial. With more depth maps to blend, adjusting their depth scales becomes difficult. Also, seamlessly blending images creates a gradual depth change along the boundary, acting like a smoothing operation. With more blending iterations, smoothing all the boundaries between the depth maps generates blurring artifacts. Choosing the proper part of each depth map and designing the process to fuse them is therefore essential.

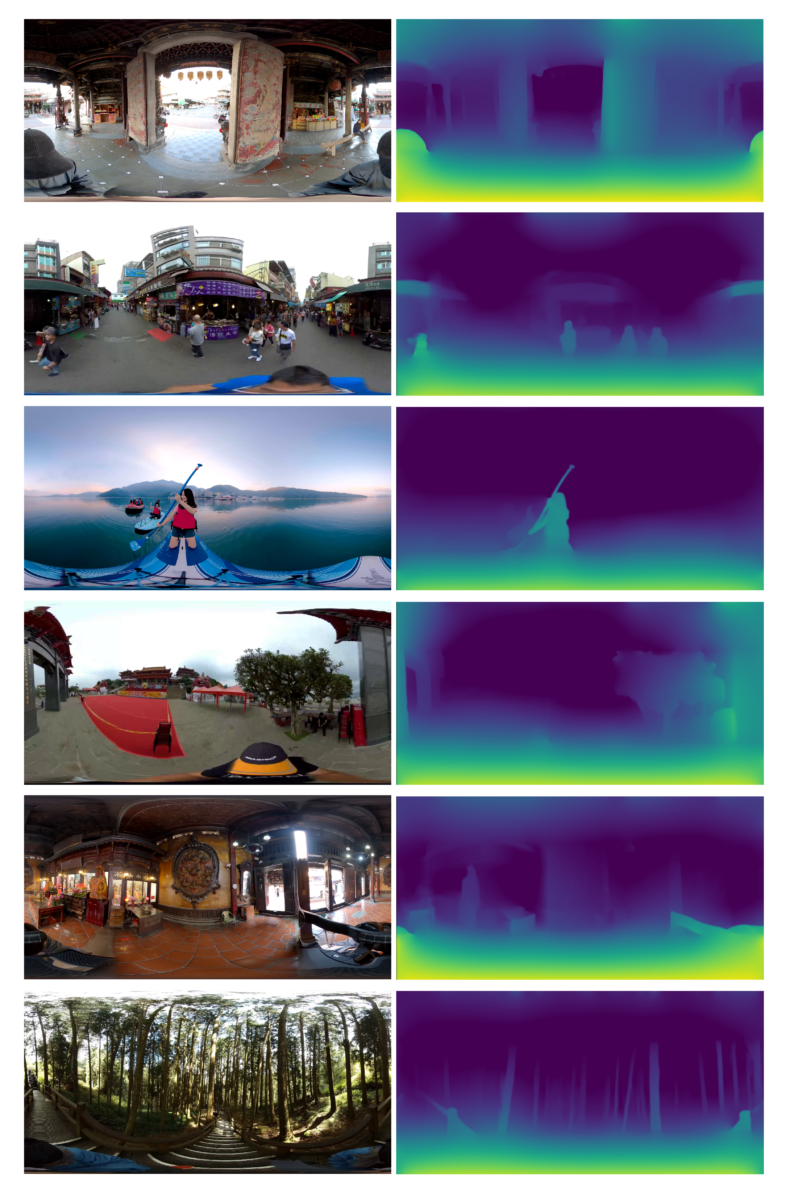

The second approach is the opposite of the previous one: it uses fewer depth maps to blend. As mentioned earlier, the estimation model cannot handle the vertical faces on the top and bottom. Therefore, even if the boundary between vertical and horizontal faces is smooth, the gradient of the predicted vertical depth maps still contradicts our perception of real-world space. As such, we only predict the depth maps of horizontal perspective images and use them to generate a 360° depth map. We expand their FOV to obtain more information and increase the overlap. In addition, the vertical regions naturally contain sky, roof, floor, and similar content. Assuming that such a region is usually smooth, without texture or depth change, we fill it with a smooth gradient field and blend it into the horizontal areas. Since the vertical region, which is the high-latitude area of an equirectangular map, corresponds to only a small area on the sphere, this artificially filled region does little harm to the 360° depth map. Instead, it creates better depth maps for the hovering experience. This method naturally fuses horizontal depth maps and vertical smooth fields to generate a spatially consistent 360° depth map and allows users to explore the three-dimensional space.
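The claim that the high-latitude region covers only a small part of the sphere can be checked with a short calculation. The sketch below compares the share of equirectangular rows with the share of actual sphere surface for the two polar caps beyond a given latitude; the function name and the 60° cutoff used in the note afterwards are illustrative assumptions, not values taken from this project.

```python
import numpy as np

def cap_fractions(lat_deg):
    """Fractions covered by the two polar caps beyond +/- lat_deg.

    Returns (image_fraction, sphere_fraction): the share of rows in the
    equirectangular map, and the share of surface area on the unit sphere.
    """
    # Rows are uniform in latitude, so the caps take (90 - lat) / 90 of the rows.
    image_fraction = (90.0 - lat_deg) / 90.0
    # Each cap has area 2*pi*(1 - sin(lat)); two caps over 4*pi gives 1 - sin(lat).
    sphere_fraction = 1.0 - np.sin(np.radians(lat_deg))
    return image_fraction, sphere_fraction
```

With a cutoff at 60° latitude, the caps occupy one third of the equirectangular rows but only about 13.4% of the sphere, so the synthetic fill affects far less of the viewing sphere than the flat map suggests.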

Figure 2: Left: Equirectangular image, Right: Equirectangular depth map generated from horizontal perspective images of the left image.

Beyond the method described above, we also consider the next step for 360° depth estimation. Taiwan Traveler uses 360° videos to create virtual tours, so it is possible to exploit the temporal information in videos to obtain more accurate depth estimates. Adjacent frames taken from different camera views comply with geometric constraints. Thus, we could use a neural network to estimate the camera motion, object motion, and scene depth simultaneously. With this information, we can calculate the pixel reprojection error as a supervision signal to train the estimation model. This self-supervised learning framework can address the lack of 360° outdoor depth map datasets and has proved feasible for depth estimation from perspective videos. As a result, unsupervised depth estimation from 360° videos is worth researching in the future.
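As a rough illustration of the reprojection supervision, the following is the formulation commonly used for perspective videos; it is not something specified by this project. Here $I_t$ and $I_s$ are a target frame and a neighboring source frame, $D_t$ is the predicted depth of the target frame, $T_{t \to s}$ is the predicted relative camera pose, and $K$ is the camera intrinsic matrix. All symbols are introduced here for illustration, and the projection is written in homogeneous coordinates.

$$
p_s \sim K \, T_{t \to s} \, D_t(p_t) \, K^{-1} p_t,
\qquad
\mathcal{L}_{\text{reproj}} = \sum_{p_t} \left| I_t(p_t) - I_s(p_s) \right|
$$

For 360° frames, the pinhole projection $K$ would presumably be replaced by a spherical (for example, equirectangular) projection, and moving objects would need an additional motion term or a mask.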

Conclusion

We exploit deep learning’s capability and design a process to fuse perspective depth maps into a 360° depth map. We tackle the current limitation of 360° scene depth estimation and construct scene geometry for a better sense of space. This method could benefit the online 360° virtual tours and elevate users’ experience of perceiving space in the virtual environment.

Figure 3: Users can hover over the image for exploring the scene, with the cursor icon warped according to the depth and surface normal at the pixel underneath the cursor.

References

[1] Ranftl, R., Lasinger, K., Hafner, D., Schindler, K., & Koltun, V. (2019). Towards robust monocular depth estimation: Mixing datasets for zero-shot cross-dataset transfer. arXiv preprint arXiv:1907.01341.

[2] Pérez, P., Gangnet, M., & Blake, A. (2003). Poisson image editing. In ACM SIGGRAPH 2003 Papers (pp. 313-318).