Hovering Around a Large Scene with Neural Radiance Field

Video 1: Hovering around Ali Mountain with a neural radiance field

Introduction

Neural Radiance Field (NeRF) [1] has been a popular topic in computer vision since 2020. By modeling the volumetric scene function with a neural network, NeRF achieves state-of-the-art results for novel view synthesis.

While NeRF-related methods are popular in academia, they have not yet been widely deployed in products that users can experience. This article demonstrates how a neural radiance field can be used to create an immersive experience in which users hover around a large tourist attraction.

Background

Neural Radiance Field [1] is a recent approach to predicting novel views from existing images. While traditional 3D reconstruction methods estimate an explicit 3D representation of the scene, such as a mesh or a grid, NeRF overfits a neural network to a single scene and learns how every 3D point looks from any viewpoint in that scene. By tracing rays through the scene and minimizing an L2 image reconstruction loss, the model learns to predict the color and volume density of every point in the scene from several training images with known camera poses.
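To make the rendering step concrete, here is a minimal sketch of how the predicted colors and volume densities along one ray can be composited into a pixel, following the quadrature rule described in the NeRF paper [1], together with the L2 loss against the ground-truth pixel. The function names and the toy sample values are illustrative assumptions, not part of our pipeline.

```python
import math

def render_ray(densities, colors, deltas):
    """Composite samples along one ray into a single RGB pixel.

    densities: volume density sigma_i predicted at each sample (>= 0)
    colors:    (r, g, b) predicted at each sample
    deltas:    length of the ray segment that each sample covers
    """
    pixel = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light that has not been absorbed yet
    for sigma, rgb, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)  # opacity of this segment
        weight = transmittance * alpha          # contribution of this sample
        for c in range(3):
            pixel[c] += weight * rgb[c]
        transmittance *= 1.0 - alpha
    return pixel

def l2_loss(predicted, target):
    """Squared error between a rendered pixel and the ground-truth pixel."""
    return sum((p - t) ** 2 for p, t in zip(predicted, target))

# Toy ray: empty space, then an opaque red surface, then something blue behind it.
pixel = render_ray(
    densities=[0.0, 1000.0, 5.0],
    colors=[(0.0, 1.0, 0.0), (1.0, 0.0, 0.0), (0.0, 0.0, 1.0)],
    deltas=[0.1, 0.1, 0.1],
)
loss = l2_loss(pixel, (1.0, 0.0, 0.0))
```

In this toy example the opaque red sample absorbs all remaining light, so the blue sample behind it does not contribute and the rendered pixel is red. During training, the loss is averaged over many rays and backpropagated into the network that predicts the densities and colors.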

Since the publication of NeRF, there have been several follow-up studies. By rendering conical frustums instead of rays, Mip-NeRF [2] eliminates aliasing without supersampling. By modeling the far scene differently from the near scene, Mip-NeRF 360 [3] and NeRF++ [4] achieve better visual results in the “background scenes”. By storing features locally in the scene, Instant-NGP [5] and Point-NeRF [6] allow the models to encode large scenes and converge quickly during training. By combining multiple neural radiance fields, Block-NeRF [7] allows the models to encode even larger scenes, such as an entire neighborhood of San Francisco.

Improving NeRF for Encoding Large Scenes

The first step towards encoding a large tourist attraction in a neural radiance field is choosing a model structure suitable for our use case. Despite NeRF’s strong performance on small 360-degree scenes, encoding large, complex scenes in NeRF is not feasible because its simple MLP encoding lacks the capacity. Mip-NeRF 360 and NeRF++ do not let users hover far from the captured region, since the far scene is encoded differently from the near scene. Block-NeRF models large scenes well, but it takes a considerable amount of time and computing power to train. In contrast, by storing trainable local features in hash tables and treating the near and far parts of a scene in the same way, the NeRF implementation of Instant-NGP (referred to as Instant-NeRF in this article) can create a large neural radiance field in which users can hover freely. In this project, we therefore adopt Instant-NGP’s method of storing local features in the scene, and we use COLMAP [8] to compute camera poses for the input images. However, to encode a tourist attraction and let users hover around it, we still need to capture enough viewpoints in the training data and remove dynamic objects.
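The idea of storing trainable local features in hash tables can be illustrated with a small sketch of the spatial hash and the multiresolution grid described in the Instant-NGP paper [5]. This is a simplified illustration rather than the actual Instant-NGP code: the function names, the table size, and the resolutions are assumptions chosen for the example, and the trilinear interpolation of the looked-up features is omitted.

```python
import math

# Per-axis primes used by the spatial hash in the Instant-NGP paper.
PRIMES = (1, 2654435761, 805459861)

def spatial_hash(ix, iy, iz, table_size):
    """Map an integer grid vertex to a slot in a fixed-size feature table."""
    h = (ix * PRIMES[0]) ^ (iy * PRIMES[1]) ^ (iz * PRIMES[2])
    return h % table_size

def level_resolutions(n_min, n_max, num_levels):
    """Grid resolutions that grow geometrically from n_min towards n_max."""
    growth = math.exp((math.log(n_max) - math.log(n_min)) / (num_levels - 1))
    return [int(math.floor(n_min * growth ** level)) for level in range(num_levels)]

def vertex_slots(point, resolution, table_size):
    """Table slots of the 8 voxel corners surrounding a point in [0, 1]^3."""
    base = [int(math.floor(c * resolution)) for c in point]
    slots = []
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                slots.append(
                    spatial_hash(base[0] + dx, base[1] + dy, base[2] + dz, table_size)
                )
    return slots

# Hypothetical settings: 6 levels between 16 and 512 cells, 2**14 slots per level.
resolutions = level_resolutions(16, 512, 6)
slots_per_level = [vertex_slots((0.5, 0.25, 0.75), r, 2 ** 14) for r in resolutions]
```

Because every level uses a table of fixed size, memory does not grow with the extent of the scene; fine levels simply let distant vertices share slots, and training resolves the collisions. This is one reason the approach is attractive for large scenes.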

Getting Enough Viewpoints in Training Data

Video 2: Instant-NeRF does not work well on extrapolating colors from unseen angles

Video 3: Our method improves image quality from a variety of viewpoints

Unlike traditional 3D reconstruction methods, neural radiance fields allow objects to appear in different colors from different angles. However, Instant-NeRF does not extrapolate colors well to unseen angles (see Video 2). Thus, to encode a scene in Instant-NeRF, we need a filming strategy that lets the model see the scene from a variety of angles.

Traditionally, Instant-NeRF assumes that all training images point to a common focus. However, we found that this filming strategy works best for encoding objects, not large scenes. When filming a large scene, a common focus is not always visible across images. We also need more flexible techniques for large scenes, since they often contain more complex objects and occluded areas.

To get enough different angles, we developed a new method for filming perspective input images. Specifically, we circle the scene to film 360° inward-facing videos, and we film from different heights, so that the model has enough information to predict color from different angles. We then sample the video at 2 frames per second to ensure that COLMAP gets enough common features to compute camera poses.
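As a rough illustration of the sampling step, the sketch below computes which frames of a video would be kept when subsampling to 2 frames per second. The function name and its parameters are hypothetical; in practice the extraction itself could also be done with an existing tool such as the `fps` filter of ffmpeg.

```python
def sampled_frame_indices(total_frames, video_fps, target_fps=2.0):
    """Indices of the frames to keep when subsampling a video to target_fps."""
    if video_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    # Number of source frames between two kept frames (never below 1,
    # so that no frame is selected twice).
    step = max(1.0, video_fps / target_fps)
    indices = []
    k = 0
    while True:
        index = int(k * step + 0.5)  # nearest source frame to the k-th sample
        if index >= total_frames:
            break
        indices.append(index)
        k += 1
    return indices

# A 3-second clip recorded at 30 fps keeps 6 frames at 2 fps.
kept = sampled_frame_indices(total_frames=90, video_fps=30.0)
```

Computing each index from `k * step` rather than accumulating the step avoids drift for non-integer frame rates such as 29.97 fps.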

In addition, our system supports 360° videos as input. Traditionally, Instant-NeRF and COLMAP support only perspective input data. To the best of our knowledge, we are the first to use 360° videos to train Instant-NeRF. In general, one would not consider forward-walking 360° videos suitable for Instant-NeRF training, since they lack a common focus even when there is no occluded space. However, we found that a 360° video can produce great results for a large scene because it satisfies two conditions: COLMAP has enough common features to match across frames, and Instant-NeRF has a wide variety of training data for interpolating the color of every point in space. When using 360° videos from Taiwan Traveler, we first convert the sampled panoramic frames into perspective images. A common way to do this is to project the spherical 360° image onto a six-face cube map. We found that COLMAP can accurately estimate the camera poses of cube map images. As a result, we can convert equirectangular images into a format that Instant-NeRF supports and produce high-quality results. Furthermore, we provide an option to discard the vertical faces (up and down) in outdoor scenes, since they usually contain little information about the scene and could degrade the model with misleading camera poses. With 360° videos, we found that we can get better results with easier filming techniques.

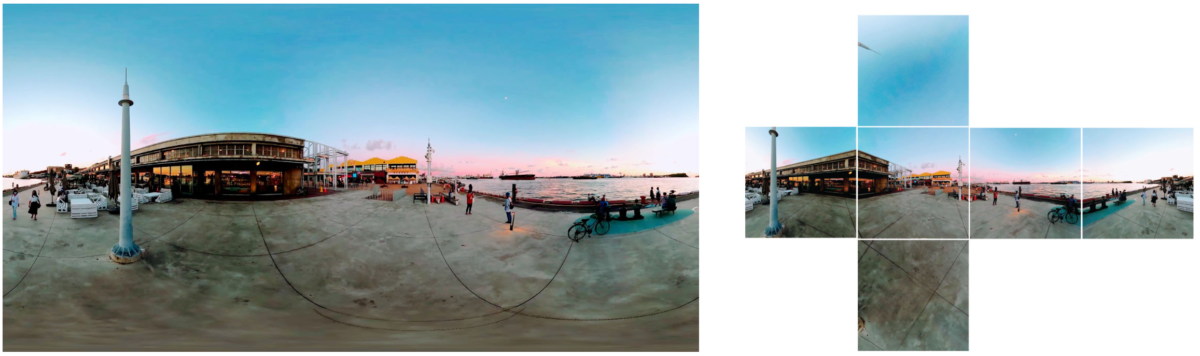

Picture 1: Converting a 360° equirectangular image (left) to a cube map (right). Vertical images in the cube map (the up and down ones) usually contain less information about the scene
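The conversion shown in Picture 1 can be sketched as a per-pixel lookup: for each pixel of a cube face, compute its viewing direction and find where that direction lands in the equirectangular image. The code below is a minimal sketch under assumed conventions (x to the right, y up, z forward, forward direction at the horizontal center of the panorama); the face names and function names are illustrative, and a real converter would also interpolate between source pixels.

```python
import math

# Viewing direction for face coordinates a (rightwards) and b (downwards) in [-1, 1].
FACES = {
    "front": lambda a, b: (a, -b, 1.0),
    "right": lambda a, b: (1.0, -b, -a),
    "back": lambda a, b: (-a, -b, -1.0),
    "left": lambda a, b: (-1.0, -b, a),
    "up": lambda a, b: (a, 1.0, b),
    "down": lambda a, b: (a, -1.0, -b),
}

def cube_pixel_to_equirect(face, col, row, face_size, pano_width, pano_height):
    """Return the (x, y) position in the panorama seen by one cube-face pixel."""
    # Pixel centre mapped to [-1, 1] on the face plane.
    a = 2.0 * (col + 0.5) / face_size - 1.0
    b = 2.0 * (row + 0.5) / face_size - 1.0
    x, y, z = FACES[face](a, b)
    lon = math.atan2(x, z)                 # longitude in [-pi, pi], 0 = forward
    lat = math.atan2(y, math.hypot(x, z))  # latitude in [-pi/2, pi/2]
    px = ((lon / (2.0 * math.pi) + 0.5) * pano_width) % pano_width
    py = (0.5 - lat / math.pi) * pano_height
    return px, py

def faces_to_export(drop_vertical):
    """Face names to convert; optionally skip the up and down faces."""
    if drop_vertical:
        return [name for name in FACES if name not in ("up", "down")]
    return list(FACES)

# Centre pixel of the front face of a 101x101 cube face, 4096x2048 panorama.
center = cube_pixel_to_equirect("front", 50, 50, 101, 4096, 2048)
outdoor_faces = faces_to_export(drop_vertical=True)
```

With `drop_vertical=True`, only the four horizontal faces are exported, which corresponds to the option of discarding vertical images in outdoor scenes.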

Picture 2: Perspective (left) and 360° (right) filming methods, with the green pyramids being camera positions

Dynamic Object Removal

When encoding a popular site, chances are there will be many people or cars moving around in the scene. Moving objects could be a challenge to Instant-NeRF and COLMAP because both assume input data to be static.

To tackle this issue, we use a pre-trained image segmentation model, DeeplabV3 [9], to mask common moving objects such as people and cars. Following our previous work [10], we can also obtain masks of the camera operator. We then ignore the masked pixels both when extracting features for camera pose estimation and when tracing rays during Instant-NeRF training.
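The masking logic can be sketched as follows, assuming the segmentation model has already produced a per-pixel label map. The class IDs below are an assumption (person and car in a Pascal VOC style labeling) and depend on the dataset the segmentation model was trained on; the function names are hypothetical and the label map is a toy example.

```python
# Assumed class IDs for moving objects (Pascal VOC style: 7 = car, 15 = person).
DYNAMIC_CLASS_IDS = frozenset({7, 15})

def dynamic_mask(label_map, dynamic_ids=DYNAMIC_CLASS_IDS):
    """True for every pixel whose predicted class is a dynamic object."""
    return [[label in dynamic_ids for label in row] for row in label_map]

def filter_keypoints(keypoints, mask):
    """Drop (x, y) keypoints that fall on masked pixels before pose estimation."""
    kept = []
    for x, y in keypoints:
        if not mask[int(y)][int(x)]:
            kept.append((x, y))
    return kept

def trainable_pixels(mask):
    """Pixel coordinates whose rays may be used for training."""
    return [
        (x, y)
        for y, row in enumerate(mask)
        for x, masked in enumerate(row)
        if not masked
    ]

# Toy 2x3 label map: one person pixel and one car pixel.
labels = [[0, 15, 0],
          [7, 0, 0]]
mask = dynamic_mask(labels)
static_keypoints = filter_keypoints([(0.2, 0.1), (1.5, 0.4), (0.9, 1.2)], mask)
rays = trainable_pixels(mask)
```

The same mask is applied twice: once to discard features on moving objects before matching, and once to skip the corresponding rays during training.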

Picture 3: Masking common dynamic objects with DeeplabV3

Applications

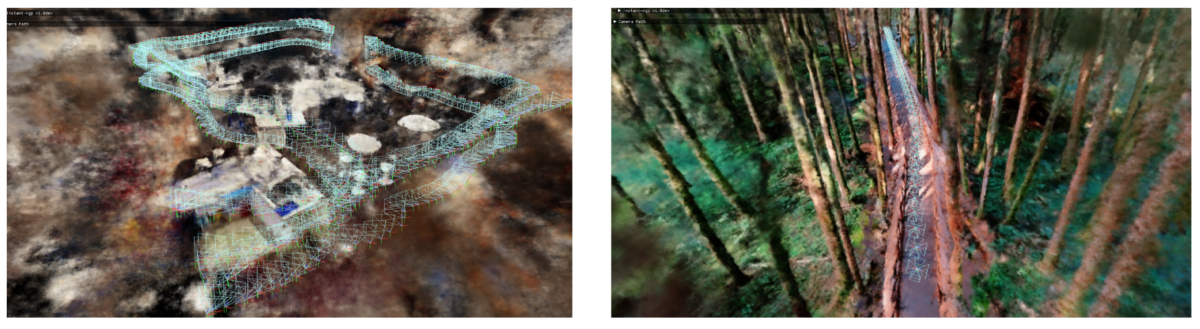

With the ability to edit camera paths after filming, directors can create many novel videos with different camera paths from a single set of training images. We integrate Potree [11], an open-source WebGL point cloud viewer, with Instant-NeRF to build a studio in which creators can edit the desired camera movements. Specifically, after the whole tourist site is encoded, Potree visualizes the sparse points output by COLMAP so that creators can assign camera paths and produce immersive hovering-around videos in the studio.
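One simple way to turn a handful of assigned key positions into a per-frame camera path is linear interpolation combined with a fixed look-at target. The sketch below is only an illustration of that idea under our own assumptions; it does not describe how Potree or our studio interpolates paths internally, and all names are hypothetical.

```python
import math

def lerp(p, q, t):
    """Point at fraction t of the way from p to q."""
    return tuple(a + (b - a) * t for a, b in zip(p, q))

def interpolate_path(key_positions, frames_per_segment):
    """Linearly interpolate camera positions between consecutive key positions."""
    path = []
    for start, end in zip(key_positions, key_positions[1:]):
        for i in range(frames_per_segment):
            path.append(lerp(start, end, i / frames_per_segment))
    path.append(tuple(key_positions[-1]))  # finish exactly on the last key position
    return path

def look_direction(position, target):
    """Unit vector pointing from the camera position towards the target."""
    diff = [t - p for p, t in zip(position, target)]
    norm = math.sqrt(sum(c * c for c in diff))
    if norm == 0.0:
        raise ValueError("camera position coincides with the target")
    return tuple(c / norm for c in diff)

# Three key positions, two frames per segment, camera always facing one landmark.
keys = [(0.0, 0.0, 0.0), (2.0, 0.0, 0.0), (2.0, 2.0, 0.0)]
path = interpolate_path(keys, frames_per_segment=2)
directions = [look_direction(p, (1.0, 1.0, 5.0)) for p in path]
```

Each position and viewing direction pair defines one camera pose to render. A smoother curve, such as a spline through the key positions, would avoid the sharp turns that linear interpolation produces at each key position.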

Videos 6 and 7: Assigning the camera path in Potree (top) and then rendering a video with novel views (bottom)

To provide a real-time, interactive experience, we deploy Instant-NeRF on a local device with a large amount of GPU memory. Moreover, by combining it with human pose estimation, we can let users fly around scenes immersively.

Conclusion

In this article, we demonstrate how to encode large scenes in neural radiance fields and let users edit camera paths afterward or fly around the field interactively. We achieve this by developing a pipeline that converts both 360-degree and perspective videos into new videos with novel camera paths. We also provide guidelines on filming techniques for encoding a large scene, and we tackle common issues in deploying neural radiance fields in practice, such as path assignment and dynamic object removal.

Videos 8 to 11: Hovering around famous tourist attractions in Taiwan with Instant-NeRF. From top to bottom: Kaohsiung Pier-2 Art Center, Xiangshan Visitor Center, Taipei Main Station, and Sun Moon Lake

References

[1] Ben Mildenhall, Pratul P. Srinivasan, Matthew Tancik, Jonathan T. Barron, Ravi Ramamoorthi, and Ren Ng (2020). NeRF: Representing Scenes as Neural Radiance Fields for View Synthesis. ECCV 2020

[2] Jonathan T. Barron, Ben Mildenhall, Matthew Tancik, Peter Hedman, Ricardo Martin-Brualla, and Pratul P. Srinivasan (2021). Mip-NeRF: A Multiscale Representation for Anti-Aliasing Neural Radiance Fields. ICCV 2021

[3] Jonathan T. Barron, Ben Mildenhall, Dor Verbin, Pratul P. Srinivasan, and Peter Hedman (2022). Mip-NeRF 360: Unbounded Anti-Aliased Neural Radiance Fields. CVPR 2022

[4] Kai Zhang, Gernot Riegler, Noah Snavely, and Vladlen Koltun (2020). NeRF++: Analyzing and Improving Neural Radiance Fields. arXiv:2010.07492

[5] Thomas Müller, Alex Evans, Christoph Schied, and Alexander Keller (2022). Instant Neural Graphics Primitives with a Multiresolution Hash Encoding. ACM Trans. Graph. July 2022

[6] Qiangeng Xu, Zexiang Xu, Julien Philip, Sai Bi, Zhixin Shu, Kalyan Sunkavalli, and Ulrich Neumann (2022). Point-NeRF: Point-based Neural Radiance Fields. CVPR 2022

[7] Matthew Tancik, Vincent Casser, Xinchen Yan, Sabeek Pradhan, Ben Mildenhall, Pratul P. Srinivasan, Jonathan T. Barron, and Henrik Kretzschmar (2022). Block-NeRF: Scalable Large Scene Neural View Synthesis. CVPR 2022

[8] Johannes Lutz Schönberger and Jan-Michael Frahm (2016). Structure-from-Motion Revisited. CVPR 2016

[9] Liang-Chieh Chen, Yukun Zhu, George Papandreou, Florian Schroff, and Hartwig Adam (2018). Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. ECCV 2018

[10] Taiwan AI Labs (2021). The Magic to Disappear Cameraman: Removing Object from 8K 360° Videos

[11] Potree, WebGL point cloud viewer for large datasets, at potree.org